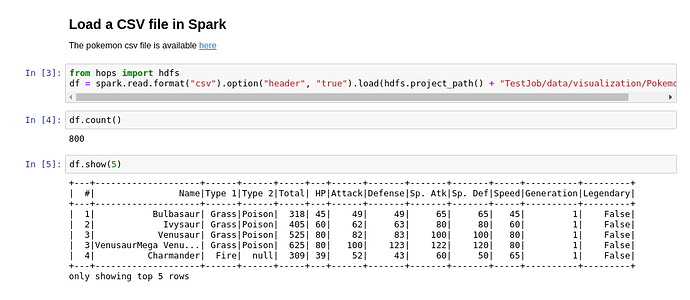

I looked at the image in the tutorial and found some PySpark code (in this tutorial).

However, I don't know how to use it in a Spark notebook. For example, I don't know what I need to import.

import ? ;

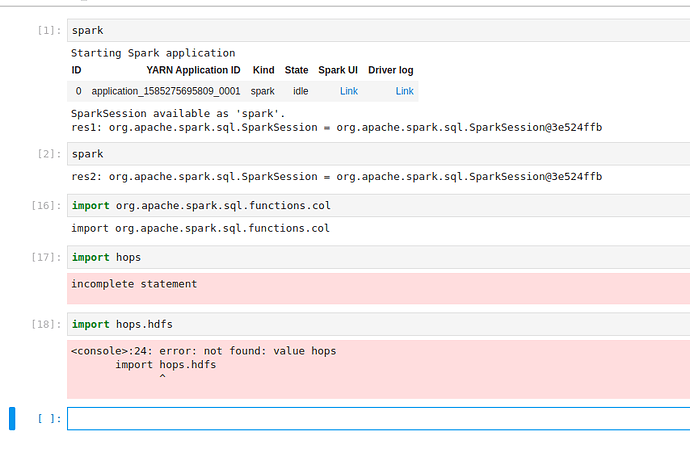

tried:

I really need documentation with code examples that run when copy-pasted, including the import statements.

I cannot reproduce the error you are getting. Are you running the Jupyter notebook inside Hopsworks?

We have many example notebooks that can be found here: hops-examples

You can also run the Deep Learning tour available on the Hopsworks start page which will provide multiple example notebooks in Jupyter.

That is a Scala notebook, not PySpark.

In that case, check out some of the Scala examples:

And the Scala version of our library for some more documentation: https://github.com/logicalclocks/hops-util