Hi Team,

Could anyone please share code for model inference using API calls to a URL? We are looking for some Python code similar to the following link:

We have a model serving instance running but are unable to connect to the URL.

Here is the code we tried:

import requests, json

url = " http://20.190.71.208:443//hopsworks-api/api/project/120/models/Customer_Classification_1?filter_by=endpoint_id:120"

data = {"inputs": [[533.2688889, 0, 1385.061, 0, 23.34242, 0], [533.2688889, 0, 1385.061, 0, 23.34242, 0]]}

r = requests.get(url)

print(r,r.text)

Thanks,

Guru

Hi Guru,

Here is an example of sklearn model serving: hops-examples/IrisClassification_And_Serving_SKLearn.ipynb at master · logicalclocks/hops-examples · GitHub. It will guide you through setting up all the necessary services. If you start the Deep Learning demo tour, this example will be available under the notebooks.

/Davit

Hi Davit,

We are expecting to make a direct API call rather than use the standard inference endpoint.

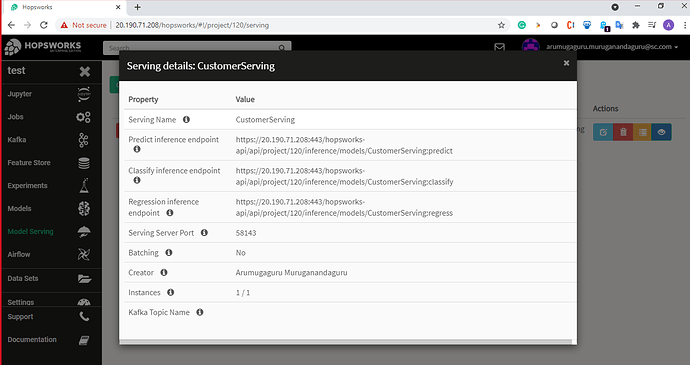

We are calling the REST API using the endpoint URL (https://20.190.71.208:443/hopsworks-api/api/project/120/inference/models/CustomerServing:predict), as shown in the screenshot below.

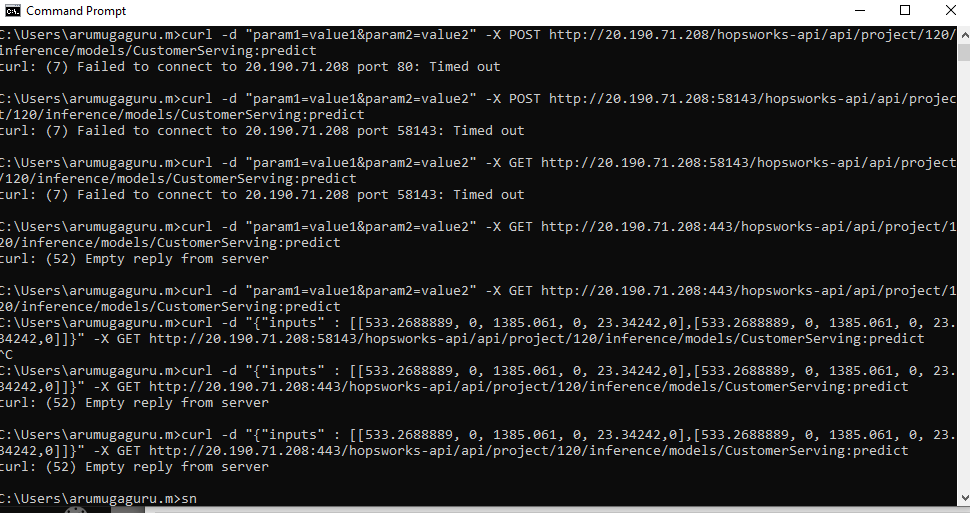
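For reference, a minimal sketch of such a direct call in Python is shown here. It assumes the `:predict` endpoint accepts an HTTP POST with a JSON body (the first snippet in this thread used `requests.get` and never sent `data`), and that the request is authenticated with an API key passed in an `Authorization: ApiKey ...` header. Both points are assumptions to verify against your Hopsworks version. The helper names `build_predict_request` and `predict` are hypothetical, and only the standard library is used so the snippet has no extra dependencies.

```python
import json
import urllib.request


def build_predict_request(host, project_id, serving_name, inputs, api_key):
    """Build (but do not send) a POST request for the serving's :predict endpoint."""
    url = (
        f"https://{host}/hopsworks-api/api/project/{project_id}"
        f"/inference/models/{serving_name}:predict"
    )
    # The payload key "inputs" mirrors the snippet earlier in this thread.
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # Assumption: API-key authentication; adjust to your deployment.
        "Authorization": f"ApiKey {api_key}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def predict(request, timeout=10):
    """Send the prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_predict_request(
    host="20.190.71.208:443",
    project_id=120,
    serving_name="CustomerServing",
    inputs=[[533.2688889, 0, 1385.061, 0, 23.34242, 0]],
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
print(request.get_method(), request.full_url)

# To actually send it (requires network access and a valid key):
# print(predict(request))
```

Building the request separately from sending it makes it easy to print and compare the exact URL, method, and headers against the working curl command before any network call is made.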

With port 58143, as shown in the screenshot, the request always fails with “Timed out”. With port 443 (for HTTPS), we get the error “curl: (52) Empty reply from server”.

These are the commands we are using to call the API:

Using CURL:

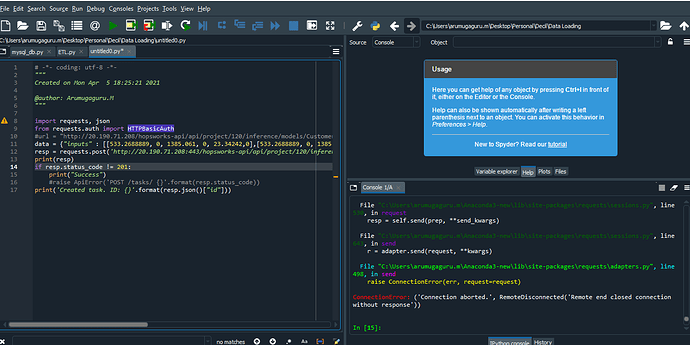

Using Python on port 443:

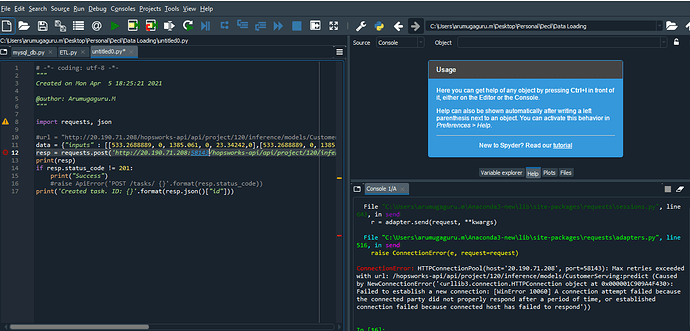

Using Python on port 58142:

Please let us know whether port 58143 is blocked on the demo server, or whether we are missing some parameters or code.

Thanks,

Guru
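One way to tell a blocked port apart from a problem in the request itself is to try a plain TCP connection first. The sketch below is only a diagnostic aid, and the helper name `check_port` is hypothetical. As a general rule of thumb (not something confirmed for this demo server), a timeout usually means packets are being dropped somewhere along the path, for example by a firewall, while a refused connection usually means nothing is listening on that port.

```python
import socket


def check_port(host, port, timeout=5.0):
    """Try a TCP connection and report 'open', 'timeout', 'refused', or an error string."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except (socket.timeout, TimeoutError):
        # No answer within the timeout: packets are likely being dropped.
        return "timeout"
    except ConnectionRefusedError:
        # The host answered, but nothing accepts connections on this port.
        return "refused"
    except OSError as exc:
        # Anything else, e.g. an unreachable network or a DNS failure.
        return f"error: {exc}"


# Example usage against the ports mentioned in this thread (requires network access):
# for port in (443, 58143):
#     print(port, check_port("20.190.71.208", port))
```

If the port reports "open" but the HTTP call still fails, the problem is more likely in the URL scheme, the headers, or the payload than in the network.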