Hi,

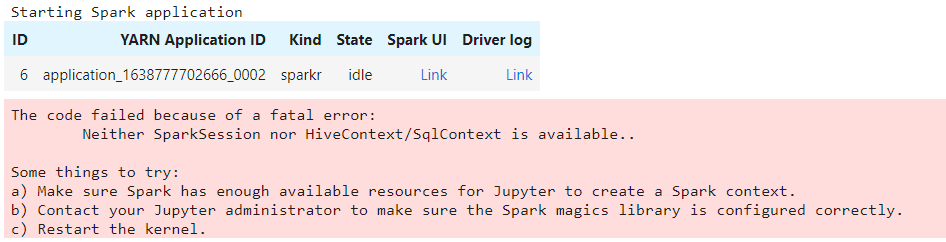

I’m facing an issue trying to use the SparkR kernel in jupyterlab. The kernel starts correctly (it is in the normal idle status) but when the pyspark job is initialized, an error occurs printing the following message:

Following the links to the YARN UI logs, I found these WARN messages:

    INFO testsparkr,jupyter,notebook,? PrometheusSink: role=driver, job=application_1638777702666_0002
    WARN testsparkr,jupyter,notebook,? SparkRInterpreter$: Fail to init Spark RBackend, using different method signature
    java.lang.ClassCastException: scala.Tuple2 cannot be cast to java.lang.Integer
        at scala.runtime.BoxesRunTime.unboxToInt(BoxesRunTime.java:103)
        at org.apache.livy.repl.SparkRInterpreter$$anon$1.run(SparkRInterpreter.scala:88)
    2021-12-06 08:43:33,802 WARN testsparkr,jupyter,notebook,? Session: Fail to start interpreter sparkr
    Cannot run program "R": error=2, No such file or directory
        at java.lang.ProcessBuilder.start(ProcessBuilder.java:1048)
        at org.apache.livy.repl.SparkRInterpreter$.apply(SparkRInterpreter.scala:143)
        at org.apache.livy.repl.Session.liftedTree1$1(Session.scala:107)
        at org.apache.livy.repl.Session.interpreter(Session.scala:98)
        at org.apache.livy.repl.Session.setJobGroup(Session.scala:358)
        at org.apache.livy.repl.Session.$anonfun$execute$1(Session.scala:164)
        at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)
        at scala.concurrent.Future$.$anonfun$apply$1(Future.scala:659)
        at scala.util.Success.$anonfun$map$1(Try.scala:255)
        at scala.util.Success.map(Try.scala:213)
        at scala.concurrent.Future.$anonfun$map$1(Future.scala:292)
        at scala.concurrent.impl.Promise.liftedTree1$1(Promise.scala:33)
        at scala.concurrent.impl.Promise.$anonfun$transform$1(Promise.scala:33)
        at scala.concurrent.impl.CallbackRunnable.run(Promise.scala:64)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Caused by: java.io.IOException: error=2, No such file or directory
        at java.lang.UNIXProcess.forkAndExec(Native Method)
        at java.lang.UNIXProcess.<init>(UNIXProcess.java:247)
        at java.lang.ProcessImpl.start(ProcessImpl.java:134)
        at java.lang.ProcessBuilder.start(ProcessBuilder.java:1029)
        ... 16 more
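The second exception (`Cannot run program "R": error=2`) reads to me as if the `R` executable is not on the `PATH` of the process that launches the interpreter. A minimal sketch of a check I could run, assuming Python is available on the node where the driver container starts and that it sees the same `PATH` as the Livy session (both are assumptions on my side; `find_executable` and `describe` are just illustrative helper names):

```python
import shutil
from typing import Optional


def find_executable(name: str) -> Optional[str]:
    """Return the absolute path of `name` if it is on PATH, else None."""
    return shutil.which(name)


def describe(name: str) -> str:
    """Build a one-line report saying whether `name` was found on PATH."""
    path = find_executable(name)
    if path is None:
        return f"{name}: not found on PATH"
    return f"{name}: found at {path}"


if __name__ == "__main__":
    # "R" is the program name taken from the log above;
    # "Rscript" is checked only as an extra hint that R is installed.
    print(describe("R"))
    print(describe("Rscript"))
```

This only reports on the machine and environment where it is run, so the result may differ from what the YARN container actually sees.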

Searching for the error, I found that it could be an incompatibility between Livy and Spark. What do you think? Any help is appreciated.

I’m using Hopsworks 2.3 on-prem.

Thank you, regards