I am trying to install Hopsworks 2.4 with Karamel, using the YAML cluster definition below:

name: Hops
baremetal:
  ips: []
  sudoPassword: ''
  username: azureuser
cookbooks:
  hopsworks:
    branch: '2.4'
    github: logicalclocks/hopsworks-chef
attrs:
  install:
    cloud: none
    kubernetes: 'false'
    dir: /srv/hops
  airflow:
    mysql_password: 0cdeba4d_204
  elastic:
    opendistro_security:
      epipe:
        password: 0cdeba4d_201
        username: epipe
      logstash:
        password: 0cdeba4d_201
        username: logstash
      audit:
        enable_rest: 'true'
        enable_transport: 'false'
      jwt:
        exp_ms: '1800000'
      elastic_exporter:
        password: 0cdeba4d_201
        username: elasticexporter
      admin:
        password: 0cdeba4d_201
        username: admin
      kibana:
        password: 0cdeba4d_201
        username: kibana
  hive2:
    mysql_password: 0cdeba4d_203
  mysql:
    password: 0cdeba4d_202
  hops:
    tls:
      crl_fetcher_interval: 5m
      crl_enabled: 'true'
      crl_fetcher_class: org.apache.hadoop.security.ssl.DevRemoteCRLFetcher
      enabled: 'true'
    yarn:
      detect-hardware-capabilities: 'true'
      pcores-vcores-multiplier: '0.66'
      system-reserved-memory-mb: '4000'
      cgroups_strict_resource_usage: 'false'
    rmappsecurity:
      actor_class: org.apache.hadoop.yarn.server.resourcemanager.security.DevHopsworksRMAppSecurityActions
  prometheus:
    retention_time: 8h
  ndb:
    TotalMemoryConfig: 4G
    LockPagesInMainMemory: '0'
    NoOfReplicas: '1'
    NumCPUs: '4'
  alertmanager:
    email:
      smtp_host: mail.hello.com
      from: hopsworks@logicalclocks.com
      to: sre@logicalclocks.com
  hopsworks:
    kagent_liveness:
      threshold: 40s
      enabled: 'true'
    featurestore_online: 'true'
    admin:
      password: 0cdeba4d_201
      user: adminuser
    application_certificate_validity_period: 6d
    requests_verify: 'false'
    encryption_password: 0cdeba4d_001
    https:
      port: '443'
    master:
      password: 0cdeba4d_002
groups:
  metaserver:
    size: 1
    baremetal:
      ips:
        - 10.0.0.10
      sudoPassword: ''
    attrs: {}
    recipes:
      - ndb::mgmd
      - elastic::default
      - hopslog::_filebeat-services
      - hops::dn
      - flink::historyserver
      - hopslog::default
      - conda::default
      - hopsmonitor::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-serving
      - hops::docker_registry
      - hops::ndb
      - hops::nn
      - onlinefs::default
      - hops::rm
      - ndb::mysqld
      - hive2::default
      - livy::default
      - hops_airflow::default
      - hops::jhs
      - kkafka::default
      - epipe::default
      - tensorflow::default
      - hopslog::_filebeat-spark
      - hadoop_spark::historyserver
      - hopsmonitor::node_exporter
      - kzookeeper::default
      - flink::yarn
      - hopsmonitor::prometheus
      - kagent::default
      - hopslog::_filebeat-jupyter
      - hopsmonitor::alertmanager
      - hopsworks::default
      - ndb::ndbd
      - consul::master
  worker0:
    size: 1
    baremetal:
      ips:
        - 10.0.0.10
      sudoPassword: ''
    attrs:
      cuda:
        accept_nvidia_download_terms: 'false'
      hops:
        yarn:
          pcores-vcores-multiplier: '1.0'
          system-reserved-memory-mb: '750'
    recipes:
      - tensorflow::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-spark
      - hopslog::_filebeat-services
      - hops::nm
      - kagent::default
      - hopsmonitor::node_exporter
      - hops::dn
      - consul::slave
      - livy::install
      - conda::default
      - flink::yarn
  worker1:
    size: 1
    baremetal:
      ips:
        - 10.0.0.11
      sudoPassword: ''
    attrs:
      cuda:
        accept_nvidia_download_terms: 'false'
      hops:
        yarn:
          pcores-vcores-multiplier: '1.0'
          system-reserved-memory-mb: '750'
    recipes:
      - tensorflow::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-spark
      - hopslog::_filebeat-services
      - hops::nm
      - kagent::default
      - hopsmonitor::node_exporter
      - hops::dn
      - consul::slave
      - livy::install
      - conda::default
      - flink::yarn
  worker2:
    size: 1
    baremetal:
      ips:
        - 10.0.0.12
      sudoPassword: ''
    attrs:
      cuda:
        accept_nvidia_download_terms: 'false'
      hops:
        yarn:
          pcores-vcores-multiplier: '1.0'
          system-reserved-memory-mb: '750'
    recipes:
      - tensorflow::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-spark
      - hopslog::_filebeat-services
      - hops::nm
      - kagent::default
      - hopsmonitor::node_exporter
      - hops::dn
      - consul::slave
      - livy::install
      - conda::default
      - flink::yarn
  worker3:
    size: 1
    baremetal:
      ips:
        - 10.0.0.13
      sudoPassword: ''
    attrs:
      cuda:
        accept_nvidia_download_terms: 'false'
      hops:
        yarn:
          pcores-vcores-multiplier: '1.0'
          system-reserved-memory-mb: '750'
    recipes:
      - tensorflow::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-spark
      - hopslog::_filebeat-services
      - hops::nm
      - kagent::default
      - hopsmonitor::node_exporter
      - hops::dn
      - consul::slave
      - livy::install
      - conda::default
      - flink::yarn
  worker4:
    size: 1
    baremetal:
      ips:
        - 10.0.0.14
      sudoPassword: ''
    attrs:
      cuda:
        accept_nvidia_download_terms: 'false'
      hops:
        yarn:
          pcores-vcores-multiplier: '1.0'
          system-reserved-memory-mb: '750'
    recipes:
      - tensorflow::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-spark
      - hopslog::_filebeat-services
      - hops::nm
      - kagent::default
      - hopsmonitor::node_exporter
      - hops::dn
      - consul::slave
      - livy::install
      - conda::default
      - flink::yarn
  worker5:
    size: 1
    baremetal:
      ips:
        - 10.0.0.15
      sudoPassword: ''
    attrs:
      cuda:
        accept_nvidia_download_terms: 'false'
      hops:
        yarn:
          pcores-vcores-multiplier: '1.0'
          system-reserved-memory-mb: '750'
    recipes:
      - tensorflow::default
      - hadoop_spark::yarn
      - hopslog::_filebeat-spark
      - hopslog::_filebeat-services
      - hops::nm
      - kagent::default
      - hopsmonitor::node_exporter
      - hops::dn
      - consul::slave
      - livy::install
      - conda::default
      - flink::yarn

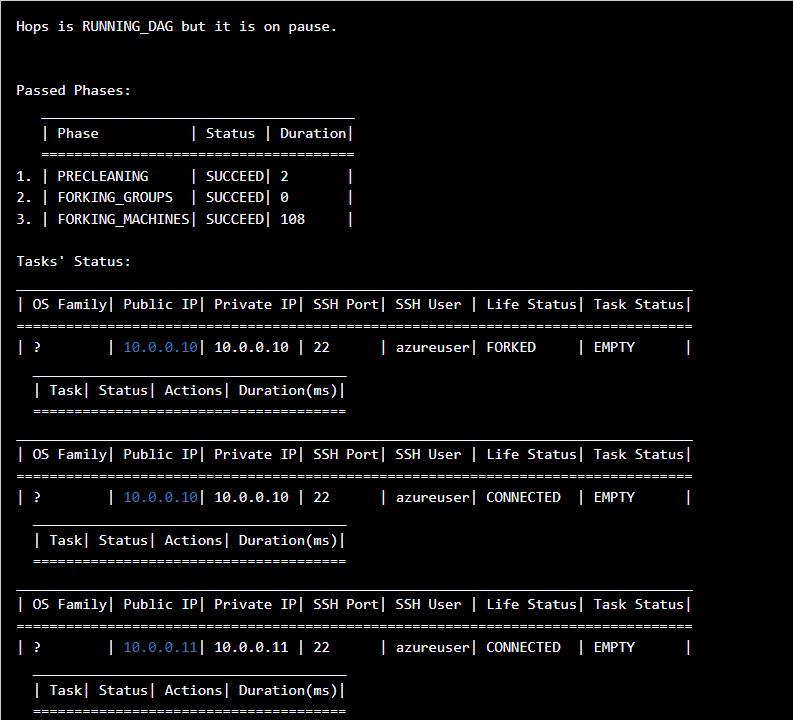

The task status is empty on all the nodes, and no application is being installed. I am using Ubuntu 18.04. The Karamel log shows the error below. Kindly help.

ERROR [2022-03-24 15:21:39,486] se.kth.karamel.backend.ClusterManager:

! se.kth.karamel.common.exception.DagConstructionException: Task 'find os-type on 10.0.0.10' already exist.

! at se.kth.karamel.backend.dag.Dag.addTask(Dag.java:50) ~[karamel-core-0.6.jar:na]

! at se.kth.karamel.backend.running.model.tasks.DagBuilder.machineLevelTasks(DagBuilder.java:334) ~[karamel-core-0.6.jar:na]

! at se.kth.karamel.backend.running.model.tasks.DagBuilder.getInstallationDag(DagBuilder.java:91) ~[karamel-core-0.6.jar:na]

! at se.kth.karamel.backend.ClusterManager.runDag(ClusterManager.java:344) ~[karamel-core-0.6.jar:na]

! at se.kth.karamel.backend.ClusterManager.run(ClusterManager.java:480) ~[karamel-core-0.6.jar:na]

! at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_312]

! at java.util.concurrent.FutureTask.run(FutureTask.java:266) [na:1.8.0_312]

! at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [na:1.8.0_312]

! at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [na:1.8.0_312]

! at java.lang.Thread.run(Thread.java:748) [na:1.8.0_312]

WARN [2022-03-24 15:21:39,486] se.kth.karamel.backend.ClusterManager: Got interrupted, perhaps a higher priority command is comming on..

INFO [2022-03-24 15:31:52,706] se.kth.karamel.backend.ClusterService: User asked for resuming the cluster 'Hops'