Hi all,

In December last year I opened a ticket (here: https://groups.google.com/forum/#!topic/hopshadoop/fVz3bOtEVUw) about using HopsworksSqoopOperator to move a generic table (13 KB in size) from MySQL to HDFS. You replied that the failure was caused by a bug in HopsFS and that installing Hopsworks 1.1 (with Hops 2.8.2.9) should solve it.

I’m now using Hopsworks 1.2 (with Hops 2.8.2.9) and trying to do the same thing.

This is the DAG:

import airflow
from datetime import datetime, timedelta

from airflow import DAG
from airflow.operators.bash_operator import BashOperator
from hopsworks_plugin.operators.hopsworks_operator import HopsworksSqoopOperator

delta = timedelta(minutes=-10)
now = datetime.now()

args = {
    'owner': 'meb10000',
    'depends_on_past': False,
    'start_date': now + delta
}

dag = DAG(
    dag_id='hopsworksSqoopOperator_test',
    default_args=args
)

CONNECTION_ID = "test_hopsworks_jdbc"
PROJECT_NAME = "Test"

task1 = BashOperator(
    task_id='Start',
    dag=dag,
    bash_command='echo "Start Sqooping"'
)

task2 = HopsworksSqoopOperator(
    task_id='sqoop_test_4',
    dag=dag,
    conn_id=CONNECTION_ID,
    project_name=PROJECT_NAME,
    table='activity',
    target_dir='/Projects/Test/sqoop-import/activity',
    verbose=False,
    cmd_type='import',
    driver="com.mysql.jdbc.Driver",
    file_type='text'
)

task3 = BashOperator(
    task_id='Finish',
    dag=dag,
    bash_command='echo "Finished Sqooping"'
)

task1 >> task2 >> task3

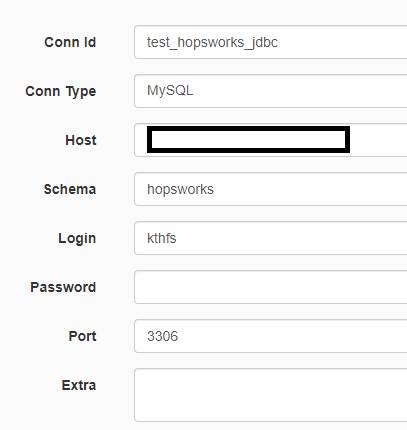

On airflow, I set “Connection” like that:

When I start the DAG, it fails:

1) The DAG log shows this error:

{models.py:1788} ERROR - Sqoop command failed: sqoop import -D yarn.app.mapreduce.am.staging-dir=/Projects/Test/Resources/.mrStaging -D yarn.app.mapreduce.client.max-retries=10 --username kthfs --password MASKED --connect MY_DB_IP:3306/hopsworks --target-dir /sqoop-import/activity --as-textfile --driver com.mysql.jdbc.Driver --table activity
Traceback (most recent call last):
  File "/opt/hops/anaconda/anaconda/envs/airflow/lib/python3.6/site-packages/airflow/models.py", line 1657, in _run_raw_task
    result = task_copy.execute(context=context)
  File "/opt/hops/airflow/plugins/hopsworks_plugin/operators/hopsworks_operator.py", line 406, in execute
    super(HopsworksSqoopOperator, self).execute(context)
  File "/opt/hops/anaconda/anaconda/envs/airflow/lib/python3.6/site-packages/airflow/contrib/operators/sqoop_operator.py", line 218, in execute
    extra_import_options=self.extra_import_options)
  File "/opt/hops/anaconda/anaconda/envs/airflow/lib/python3.6/site-packages/airflow/contrib/hooks/sqoop_hook.py", line 232, in import_table
    self.Popen(cmd)
  File "/opt/hops/anaconda/anaconda/envs/airflow/lib/python3.6/site-packages/airflow/contrib/hooks/sqoop_hook.py", line 116, in Popen
    raise AirflowException("Sqoop command failed: {}".format(masked_cmd))
airflow.exceptions.AirflowException: Sqoop command failed: sqoop import -D yarn.app.mapreduce.am.staging-dir=/Projects/Test/Resources/.mrStaging -D yarn.app.mapreduce.client.max-retries=10 --username kthfs --password MASKED --connect MY_DB_IP:3306/hopsworks --target-dir /sqoop-import/activity --as-textfile --driver com.mysql.jdbc.Driver --table activity
{models.py:1819} INFO - Marking task as FAILED.

2) hadoop.log shows this error:

ERROR org.apache.sqoop.manager.SqlManager: Error executing statement: java.sql.SQLException: No suitable driver found for MY_DB_IP:3306/hopsworks
java.sql.SQLException: No suitable driver found for MY_DB_IP:3306/hopsworks
    at java.sql.DriverManager.getConnection(DriverManager.java:689)
    at java.sql.DriverManager.getConnection(DriverManager.java:247)
    at org.apache.sqoop.manager.SqlManager.makeConnection(SqlManager.java:904)
    at org.apache.sqoop.manager.GenericJdbcManager.getConnection(GenericJdbcManager.java:59)
    at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:763)
    at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:786)
    at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:289)
    at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:260)
    at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:246)
    at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:327)
    at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1872)
    at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1671)
    at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)
    at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:501)
    at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)
    at org.apache.sqoop.Sqoop.run(Sqoop.java:147)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
    at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)
    at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)
    at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)
    at org.apache.sqoop.Sqoop.main(Sqoop.java:252)
ERROR org.apache.sqoop.tool.ImportTool: Import failed: java.io.IOException: No columns to generate for ClassWriter
    at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1677)
    at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)
    at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:501)
    at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)
    at org.apache.sqoop.Sqoop.run(Sqoop.java:147)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
    at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)
    at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)
    at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)
    at org.apache.sqoop.Sqoop.main(Sqoop.java:252)
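
One thing I notice in both logs is that the value passed to --connect is MY_DB_IP:3306/hopsworks, with no jdbc:mysql:// scheme in front. As far as I understand, java.sql.DriverManager reports "No suitable driver found" when no registered driver recognizes the URL, so this may be related. Below is a minimal sketch of the check I have in mind. The helper name looks_like_jdbc_url is hypothetical, and the idea that the full JDBC URL belongs in the connection's host field is my assumption, not something I have confirmed for Hopsworks:

```python
def looks_like_jdbc_url(connect_string, subprotocol="mysql"):
    """Return True if the string starts with the JDBC scheme for the subprotocol.

    Hypothetical helper for illustration only; it is not part of Airflow,
    Sqoop, or the Hopsworks plugin.
    """
    return connect_string.startswith("jdbc:{}:".format(subprotocol))


# Connect string exactly as it appears in the logged sqoop command.
logged = "MY_DB_IP:3306/hopsworks"

# Assumed shape of a MySQL JDBC URL for the same host, port, and schema.
candidate = "jdbc:mysql://" + logged

print(looks_like_jdbc_url(logged))     # the logged value lacks the scheme
print(looks_like_jdbc_url(candidate))  # the prefixed value has it
```

If this is indeed the cause, I would guess the host in the Airflow connection should be something like jdbc:mysql://MY_DB_IP rather than the bare IP, but I have not verified that.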

Could you help me find the problem?

Thanks a lot,

Antony